Support & Documentation

This FAQ should help HPC2N users answer common questions regarding:

Q: I have forgotten my user password. What should I do?

A: Go here to reset your password. For this to work you need a SUPR account, and your HPC2N user account has to be connected to it.

Q: Where can I see how much CPU time my project used?

A: You can use the command projinfo -p <project_ID> -v. For more information see our projectinfo webpage.

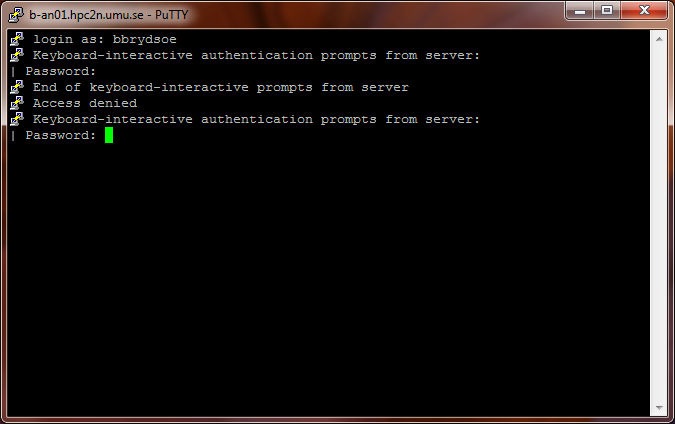

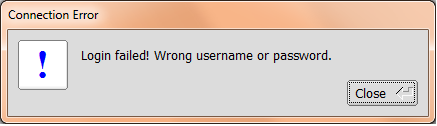

Q: What are typical errors when typing a wrong password during login?

A:

ssh: connect to host kebnekaise.hpc2n.umu.se port 22: Network is unreachable

This usually means that you have typed the wrong password or username enough times that your IP address has been blocked. You can either wait for 24 hours until it automatically unblocks or email us with your IP so we can unblock it.

Q: What are other common errors at attempted login and what do they mean?

A:

ssh: Could not resolve hostname kebnekaise.hpc2n.umu.se: Name or service not known

This means the hostname could not be resolved, which usually points to a network or DNS problem.

Q: What are common errors when resetting password through SUPR?

A:

When I am trying to reset my password I am getting this error message.

Your user account has been deactivated at HPC2N. Please contact support@hpc2n.umu.se to reactivate your user account. Include your username at HPC2N in the mail.

This means you are not currently a member of a project. You either need to apply for one or have your PI add you to a project. You can find more information about this here: Apply for HPC resources of a new project.

Q: What is the CPU Architecture of the cluster?

A: Look at the Kebnekaise hardware page.

Q: Why can't I login with SSH Key-Based Authentication?

A: This method of authentication is explicitly disabled on HPC2N's systems. If you want to use passwordless authentication, you can access HPC2N's systems through GSSAPI-aware SSH clients. GSSAPI allows you to type your password once when obtaining your Kerberos ticket, and while that ticket is valid you don't have to retype your password. There is a little more information about this on our login/password page.
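As a rough sketch of what a GSSAPI login could look like with a standard Kerberos client and OpenSSH: the username your_username is a placeholder and the realm name HPC2N.UMU.SE is an assumption, so check the login/password page for the values that apply to you.

```shell
# Obtain a Kerberos ticket; you type your password once here.
# "your_username" is a placeholder and the realm is an assumption.
kinit your_username@HPC2N.UMU.SE

# List your current tickets and their expiry times.
klist

# Log in using the ticket instead of a password prompt.
ssh -o GSSAPIAuthentication=yes -o GSSAPIDelegateCredentials=yes \
    your_username@kebnekaise.hpc2n.umu.se
```

While the ticket is valid, further ssh sessions started the same way should not ask for the password again.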

Q: Can I access the compute nodes with ssh?

A: No, we do not allow this, mainly since nodes can be shared by different users' jobs.

Q: What is the maximum time a job can run?

A: A job can run for up to the number of allocated core hours per month divided by five. However, the maximum number of (walltime) hours any job can run is 168 (or 7 days). For more information see our batch system webpage.

Q: Can I log in to computation nodes to see how my jobs are running?

A: We don't allow users to log in to computation nodes.

Q: What combination of nodes and cores should I use for a multi-threaded application?

A: At HPC2N we only allow processes of one user to run on a particular node. That way we prevent a situation in which a user with a multi-threaded application (which runs as one process, and is treated as such by the batch system, but actually uses multiple processors) competes with other users' ordinary processes. If you want to run m multi-threaded processes on n processors, you need to make sure that each process is allocated to exactly one node:
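A minimal sketch of such a submit file, assuming m = 2 processes that each run 14 OpenMP threads. The project ID, the time limit, the thread count, and the executable name are placeholders chosen for illustration and do not come from this FAQ.

```shell
#!/bin/bash
# Placeholder project ID; replace with your own.
#SBATCH -A <project_ID>
# m = 2 processes, one per node (assumed values for illustration).
#SBATCH --nodes=2
#SBATCH --ntasks-per-node=1
# Threads per process; these cores all belong to the single task on each node.
#SBATCH --cpus-per-task=14
#SBATCH --time=01:00:00

# Tell OpenMP how many threads each process may start.
export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK

# Hypothetical executable name; one copy is started per node.
srun --cpus-per-task="$SLURM_CPUS_PER_TASK" ./my_threaded_program
```

Passing --cpus-per-task to srun explicitly is a cautious choice here, since not all Slurm versions pass the value from the #SBATCH directive on to srun.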

For more complex configurations please contact HPC2N support: support@hpc2n.umu.se.

Q: When submitting a job without specifying a project account, I get an error:

sbatch: error: You must supply an account (-A ...)

sbatch: error: Batch job submission failed: Unspecified error

A: There is no default project. You must specify a valid project in your submit file (using the #SBATCH -A directive).

To apply for a project please see rules described in SUPR under the rounds, or on this page. A small level request should be sent directly to HPC2N by the Principal Investigator (PI). You can find more information here.

Q: I got "Unable to allocate resources: Job violates accounting/QOS policy" when I submit a job.

A: This is most likely because the project you are trying to use has expired.

You can check the status of your project with: projinfo -p <project_id>

If you have a new project, update your submit file to use it. Otherwise, apply for a new project.

Q: I submitted my job, and when I look at the status, it says "Nodes required for job are DOWN, DRAINED or reserved for jobs in higher priority partitions".

A: This message simply means that your job is in queue, waiting for nodes/cores to become available. The job will start when there are free nodes/cores.

Q: My job is pending and I got "Reason=AssociationResourceLimit" or "Reason=AssocMaxCpuMinutesPerJobLimit"

A: This is because your currently running jobs allocate the entire footprint allowance for your project. The job will start when enough of your running jobs have finished that you are below the limit.

Another possibility is that your job is requesting more resources (more core hours) than your allocation permits. Remember: <cores requested> x <walltime> = <core hours you are requesting>. NOTE: On Kebnekaise, if you are asking for more than 28 cores, you are accounted for a whole number of nodes, rounded up (Ex. 29 cores -> 2 nodes).
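The arithmetic above can be sketched as a small shell helper. It assumes 28 cores per node, as in the note above, and it assumes that a request rounded up to whole nodes is charged for every core on those nodes. The function names are made up for this example.

```shell
#!/bin/bash
# Estimate the core hours a job request is charged for.
# Assumes 28 cores per node, as stated above for Kebnekaise.
CORES_PER_NODE=28

# accounted_cores <cores requested>
# Requests larger than one node are rounded up to whole nodes.
accounted_cores() {
    local cores=$1
    if [ "$cores" -gt "$CORES_PER_NODE" ]; then
        local nodes=$(( (cores + CORES_PER_NODE - 1) / CORES_PER_NODE ))
        echo $(( nodes * CORES_PER_NODE ))
    else
        echo "$cores"
    fi
}

# core_hours <cores requested> <walltime in hours>
core_hours() {
    echo $(( $(accounted_cores "$1") * $2 ))
}

# 29 cores -> 2 nodes -> 56 cores, times 10 hours of walltime
core_hours 29 10   # prints 560
```

Comparing this number with the allocation reported by projinfo gives a quick indication of whether a pending job can fit at all.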

Q: I am used to using the PBS batch system. What are the main differences between that and SLURM (which is used at HPC2N)?

A: There are a number of differences between SLURM and more common systems like PBS. The most important ones are:

Comparison of some common commands in SLURM and in PBS / Maui.

| Action | Slurm | PBS | Maui |
|---|---|---|---|
| Get information about the job | scontrol show job <jobid> | qstat -f <jobid> | checkjob |
| Display the queue information | squeue | qstat | showq |
| Delete a job | scancel <jobid> | qdel | |
| Submit a job | srun/sbatch/salloc | qsub | |
| Display how many processors are currently free | | | showbf |
| Display the expected start time for a job | squeue --start | | showstart <jobid> |
| Display information about available queues/partitions | sinfo/sshare | qstat -Qf | |

Q: How can I control affinity for MPI tasks and OpenMP threads?

A: You can use mpirun's binding options or srun's --cpu_bind option to control MPI task placement. Alternatively, you can use hwloc-bind (from the hwloc module) or numactl.
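As a hedged illustration, the submit file below shows one way to pin MPI tasks to cores with srun and to keep OpenMP threads close to their task. The executable name is hypothetical and the resource numbers are arbitrary. Newer Slurm releases spell the option --cpu-bind rather than --cpu_bind, which is the form used here.

```shell
#!/bin/bash
# Placeholder project ID; replace with your own.
#SBATCH -A <project_ID>
# 4 MPI tasks with 7 cores each (arbitrary example values).
#SBATCH --ntasks=4
#SBATCH --cpus-per-task=7
#SBATCH --time=00:30:00

# Standard OpenMP environment variables for thread placement.
export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK
export OMP_PLACES=cores
export OMP_PROC_BIND=close

# Bind each MPI task to its allocated cores and report the binding.
# Older Slurm releases spell the option --cpu_bind.
srun --cpus-per-task="$SLURM_CPUS_PER_TASK" --cpu-bind=verbose,cores ./my_hybrid_program
```

The verbose keyword makes srun print the mask it applied to each task, which is a simple way to check that the placement is what you intended.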

Q: I accidentally deleted a file. How do I restore it?

A: Your home directory ($HOME) and its subdirectories are backed up nightly. To request retrieval of files you need to contact support@hpc2n.umu.se. Files removed more than 30 days ago are irretrievably lost.

Q: I need to use a specific compiler version with MPI. Which modules should I add?

A: Load the compiler toolchain you want, including MPI (foss, intel, etc.). See our "Installed compilers" page for more information.

For example:

ml foss

or

ml intel

Read more about modules here.

Q: Why does my compilation fail with: "*** Subscription: Unable to find a server."?

A: The above message occurs when all of our PathScale compiler licenses are in use. Try again after a while (about 5-10 minutes).

Q: Can I disable usage of Infiniband by OpenMPI?

A: Use the parameter -mca btl '^openib' with mpiexec. Keep in mind that this option is for testing purposes only, since your MPI communication would then interfere with other gigabit Ethernet traffic (especially file system traffic).

Q: How do I increase the stack size of an OpenMP thread when running a PathScale(TM) Fortran program?

A: Add export PSC_OMP_STACK_SIZE=128m to your submit file to set the per-thread stack size to 128 MB.

Q: How can I get access to restrictively licensed software?

A: We need to get a confirmation from a license holder that you can use the software along with a license number and/or complete license name.

Q: Should I use mpirun or srun?

A: Both should work interchangeably, though mpirun may not always work with standard input (mpirun prog < file) and Intel MPI.